Skills:

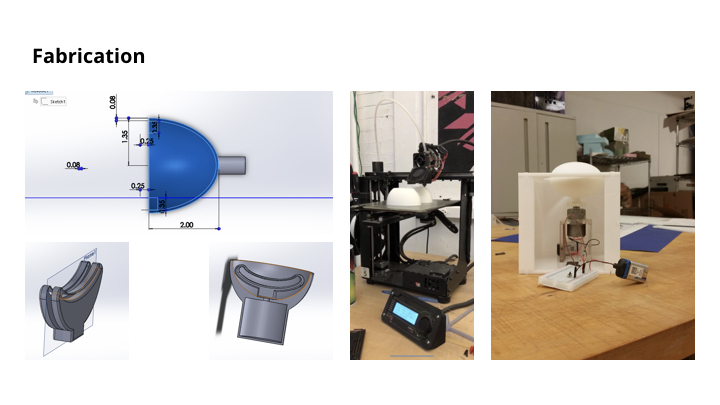

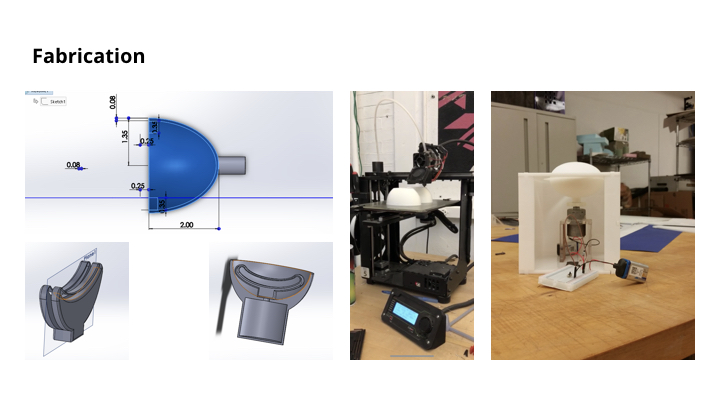

Physical Prototyping

Digital Fabrication

Fusion 360

Arduino

Photography

Spring 2018

Team members:

Deepika Mittal

Tatyana Mustakos

Tedo Chou

Professor:

Henny Admoni

Pebble:

If Intelligent Assistants had Motion

Pebble is a project that explores motion as a valuable part of our ever-evolving relationship with digital assistants. Grounded in an understanding of the relationship between people and robots, the project has three main components: research, a study with insights, and a final form design. The result is a hardware interface and interaction design proposal for a motion-supported assistant speaker.

Opportunity

Physical motion is a rare method of interaction for assistants. Free from the constraints of shipping and manufacturing, this project explores motion as a hardware interaction opportunity.

Goal

Assess the hardware communication limitations of current assistants, evaluate the potential of motion as a method of communication, and create an initial design proposal that integrates those findings.

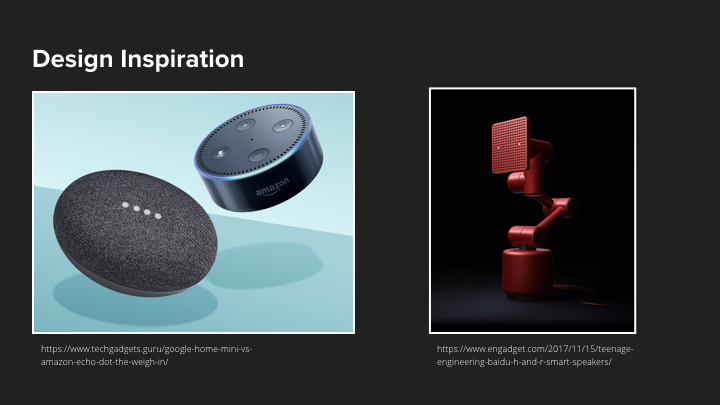

Inspiration and Current States

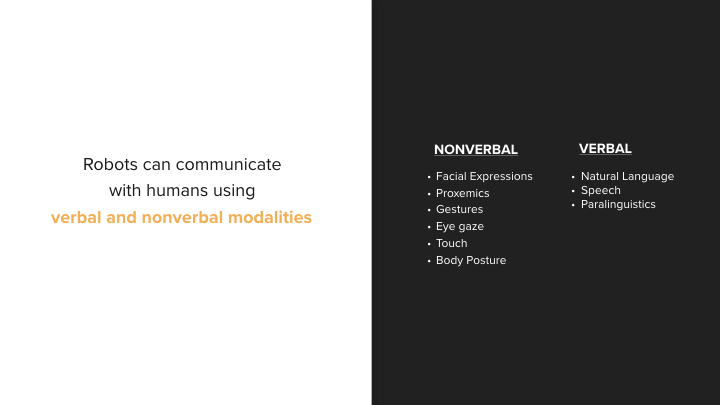

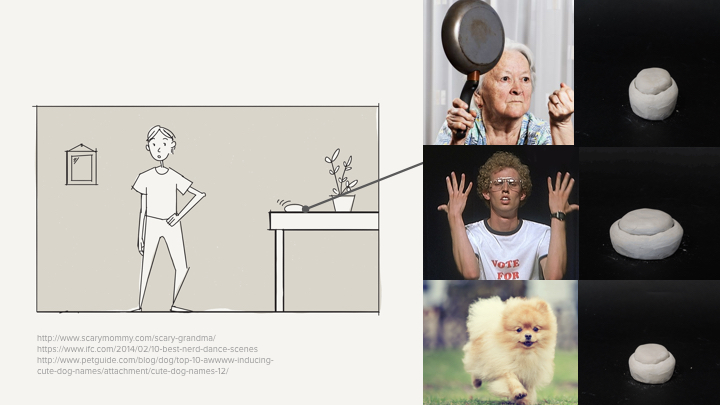

Demonstrated in many forms, motion serves as a way to exemplify character, personality, and action.

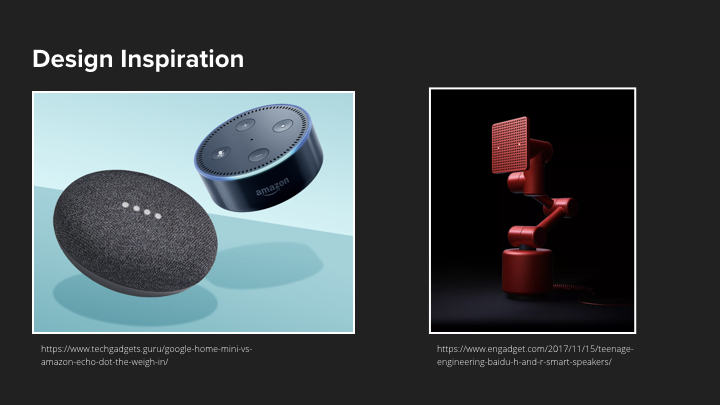

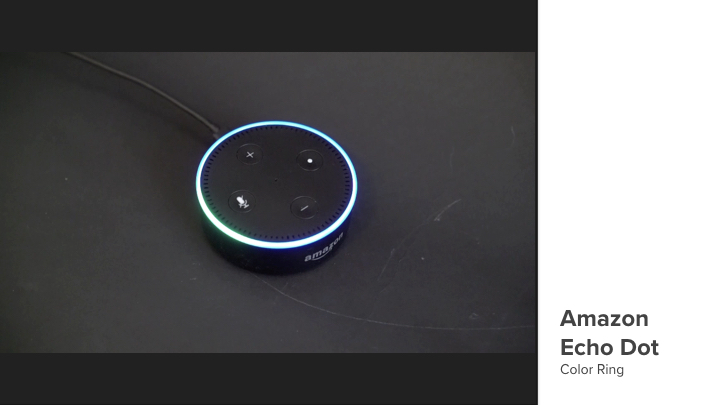

Our team took inspiration from the world of animation as well as from current assistant forms that integrate motion. Examples include Jibo and Raven H, which incorporate physical motion, and the light animations of both the Google Mini and the Dot.

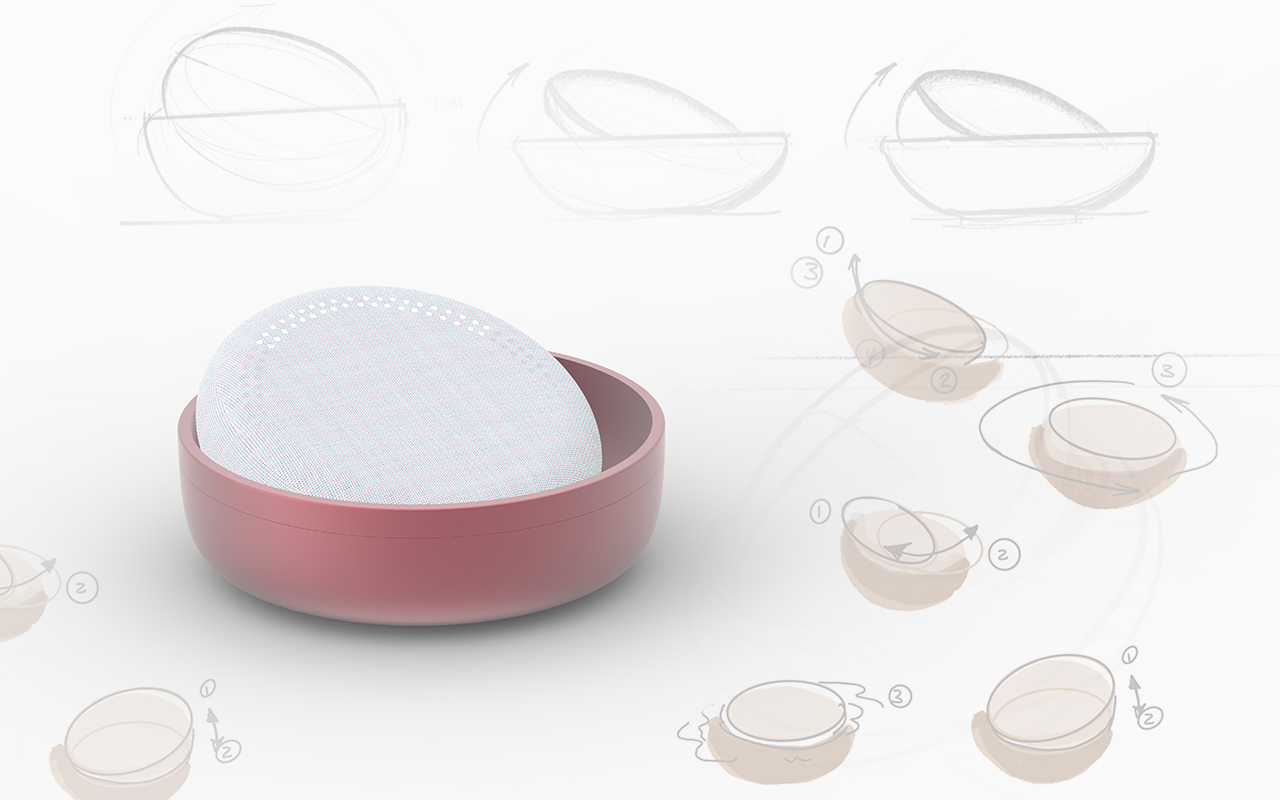

Form Design

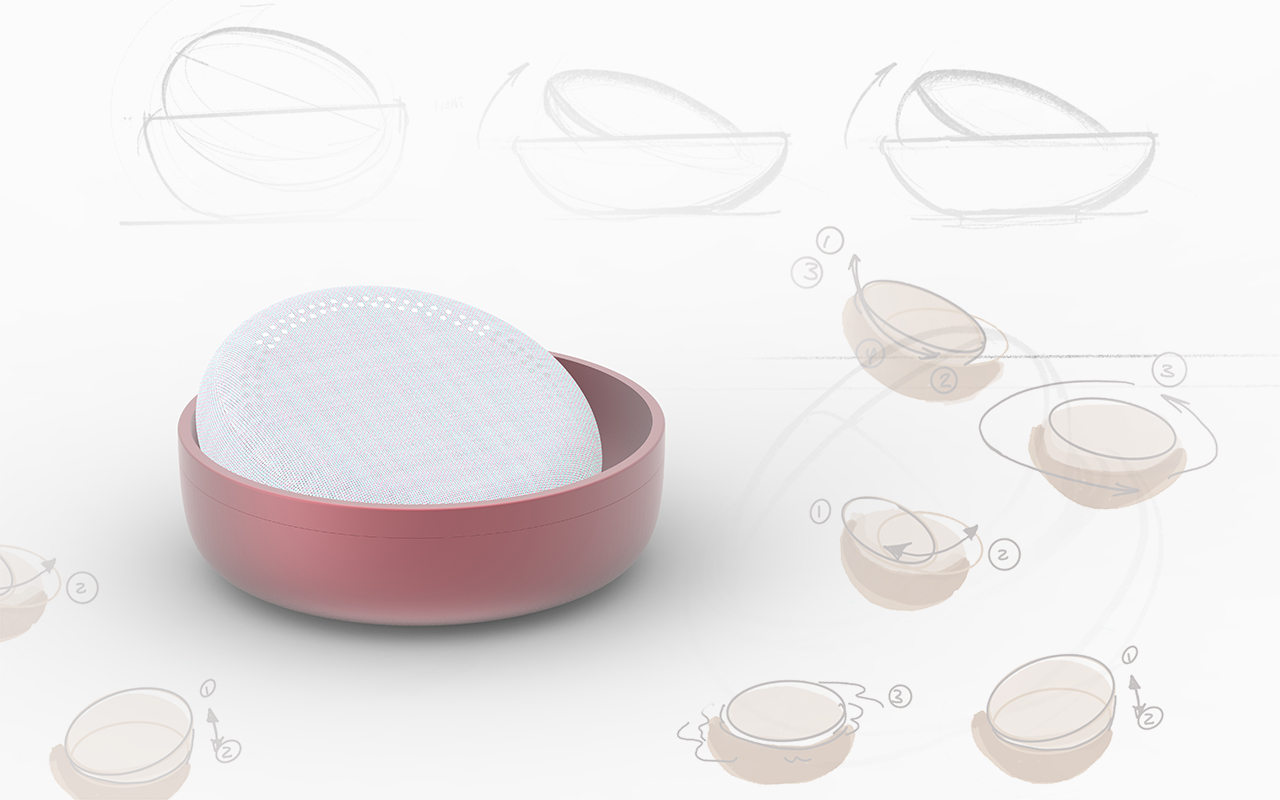

Sketch rendering of the final form

Our team aimed to create a form that hinted at movement but was similar in design to the assistant speakers currently on the market. We created a simple form consisting of two parts: a head for movement and a base body.

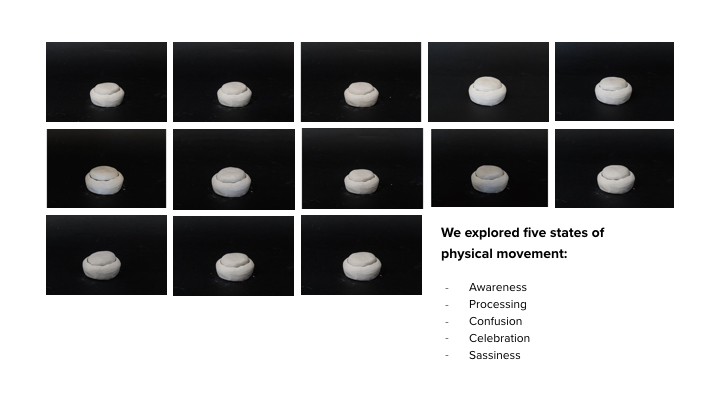

Lo-fi Prototyping

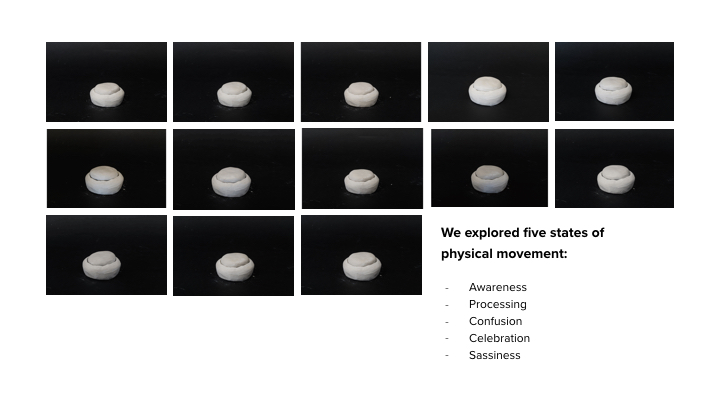

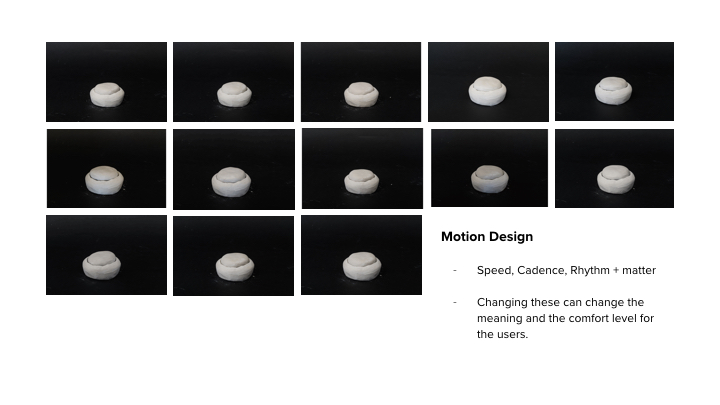

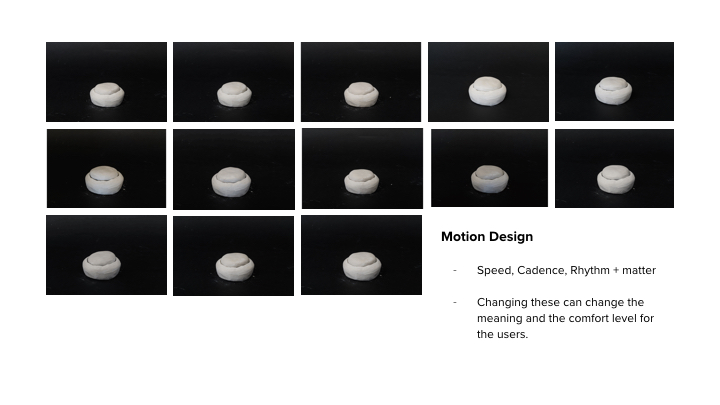

In parallel with building a clay model aimed at higher fidelity, our team prototyped low-fidelity stop-motion animations to quickly test ways our assistant could incorporate motion.

We prototyped five different expression states: three that communicate function (awareness, processing, and confusion) and two that communicate emotion (sassiness and celebration).

Awareness

When a user invokes Pebble, these motions demonstrate Pebble's awareness of the user and their place in space.

Processing

When a query is being processed, these motions exemplify Pebble's 'thinking' states.

Confusion

These motions are for when Pebble doesn't understand a query or can't take the proper action.

Sassiness

Using motion to express sassiness as a state of personality.

Celebration

Using motion to express celebration as a state of personality.

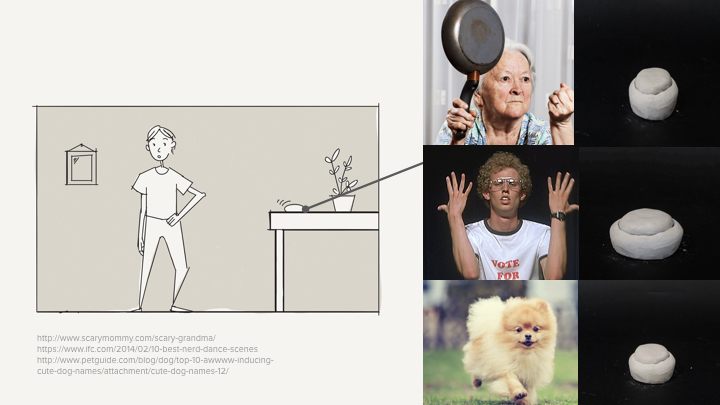

User Studies

Study Intent

After prototyping animations, our team conducted eight interviews through casual conversation. We designed a deck containing both the original light animations of the Google Mini and our animations of Pebble. Our goal was to assess the following four areas:

The perception of light animation (in the Google Mini) compared to physical movement (in Pebble) as a means of nonverbal communication.

The perception of each physical motion study, and the reasons testers preferred a particular sketch for each expression.

How accurately each sketch conveyed its intended expression.

Whether physical movement affects subjects' perception of their potential social relationship with their personal assistants.

Study Format & Questions

Our study took the form of casual interviews covering the five categories of expression. Testers were given no prior knowledge of the project, in order to limit bias.

After a short introduction, each section began with testers describing the motion they saw and guessing which expression state it represented. Afterwards, we compared the different versions of the same interaction, and finally compared them with the Google Mini parallel.

The original slides used for our user study can be found here: https://docs.google.com/presentation/d/18msiAbF9MaqVvXR9L1pHnwRBBnplZQviRdQ_4RYXOPI/edit?usp=sharing

Insights

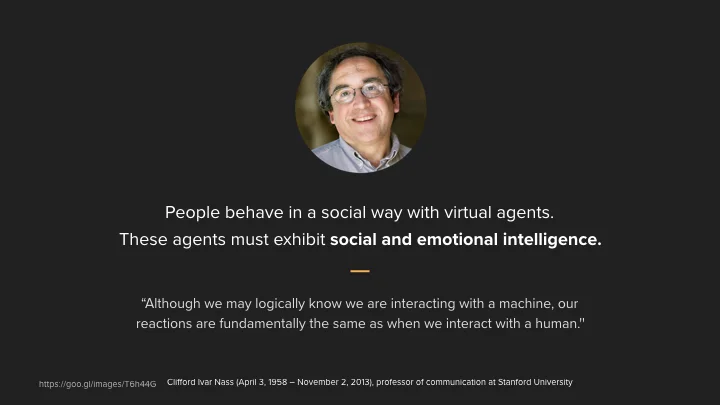

1. Motion is more communicative socially and emotionally

Throughout the study, we found testers consistently used sound and metaphor as descriptors for the interaction designs. Doing so hints at a perception of liveliness that is more dynamic socially and emotionally.

Compared to the more functional light animations of the Google Mini, motion drove responses like the following:

"Oh oh, it's dancing like it's at a club! Like 'unce! unce! unce!'"

"This one's going 'woooooo~~~~~'"

"This reminds me of a little chicken"

2. When and where someone interacts with a motion-based device matters

Depending on context, motion can be perceived as either social or intrusive. That perception depends on the user's intent and the distance between the user and the device.

Multiple testers expressed a desire to “have a conversation” with the device if it had motion.

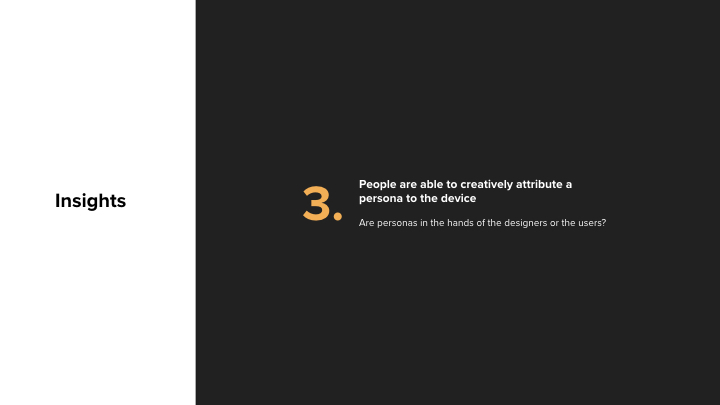

3. People are able to attribute personas to motion-based devices

Across the board, users consistently used metaphor to personify the potential device. This hints at perceptions that are far more dynamic and complex than those of current devices, although the perceptions themselves differed from person to person.

Limitations

1. We were comparing our low fidelity prototypes with a highly finished working product.

This mismatch in fidelity affected users' responses. While we did our best to acknowledge the differences and propose a 'what if', this does not erase the bias completely.

2. The Google Mini slides consisted of two modalities, audio and visual (light animation in the four dots), while our motion sketches used only one: motion.

We asked users to imagine the motion with the same audio as in the Google Mini, but this doesn't account for the fidelity bias of comparing the two.